Sting SF 28 AI. A chess engine with unique abilities.

- Darius

- Nov 14, 2022

Updated: Dec 11, 2023

Sting is free software released under the GPL 3 license, with access to the source code. It is a chess engine based on Stockfish 2.1.1, an early version of Stockfish released in 2011.

Sting can... sting... very aptly.

The author, Mr. Marek Kwiatkowski, has spent many years developing Sting into a tool for, among other things, solving very difficult chess problems, better understanding "incalculable" positions (i.e. positions that even modern engines running on the most powerful computers cannot solve correctly), and pointing out the most accurate moves and ideas for building and breaking so-called chess fortresses.

You will look in vain for the latest versions of this engine in the rating lists. The author's goal was to systematically raise the engine's "IQ", meaning its problem-solving ability, rather than its playing strength.

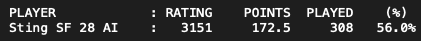

Source: MCERL (ongoing)

As part of the MCERL rating games, I decided to give the latest version of Sting a chance. After 308 games, Sting SF 28 AI achieved a rating of 3151 Elo.

Is that a lot or a little?

If we compare the strength of Sting with that of Stockfish 2.1.1, on which Sting is based, Sting turns out to be stronger by 59 Elo points.

Source: CCRL

Compared to Stockfish 15, the latest official Stockfish release, the gap already reaches several hundred Elo in favor of Stockfish 15.
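To put these rating gaps in perspective, the standard Elo formula converts a rating difference into an expected score per game. The snippet below is a minimal sketch of that arithmetic; the 300-point value is only an illustrative stand-in for "several hundred" and is not a measured figure.

```python
def expected_score(elo_diff: float) -> float:
    """Expected score per game (win = 1, draw = 0.5, loss = 0)
    for the side that is rated elo_diff points higher."""
    return 1.0 / (1.0 + 10.0 ** (-elo_diff / 400.0))


# 59 Elo: the gap between Sting and Stockfish 2.1.1 quoted above.
print(f"{expected_score(59):.3f}")   # about 0.584

# 300 Elo: an assumed example value for "several hundred".
print(f"{expected_score(300):.3f}")  # about 0.849
```

In other words, a 59-point edge corresponds to scoring roughly 58% against the older engine, while a gap of a few hundred points means the stronger side takes the large majority of the points.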

The feature that sets the Sting engine apart is its efficiency in solving problems that state-of-the-art chess engines cannot cope with.

Here are some examples of positions in which modern chess engines fail.

Test platform: MacBook Pro M1, Hiarcs Chess Explorer Pro GUI, 7 Threads and 1GB hash table for Sting chess engine.
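For readers who want to reproduce these settings without a GUI, the same configuration can be expressed as plain UCI commands. The sketch below only builds the command list. It assumes Sting exposes the standard `Threads` and `Hash` options (as Stockfish does), uses an illustrative 3-second search time, and falls back to the starting position because the test positions are not given here as FEN strings; `build_uci_commands` is a hypothetical helper name.

```python
from typing import List, Optional


def build_uci_commands(threads: int = 7,
                       hash_mb: int = 1024,
                       fen: Optional[str] = None,
                       movetime_ms: int = 3000) -> List[str]:
    """Return UCI commands that configure an engine and start a timed search.

    Assumptions: the engine accepts the standard "Threads" and "Hash"
    options; Hash is given in megabytes, so 1 GB is 1024.
    """
    # Without a FEN string we fall back to the starting position.
    position = f"position fen {fen}" if fen else "position startpos"
    return [
        "uci",
        f"setoption name Threads value {threads}",
        f"setoption name Hash value {hash_mb}",
        "isready",
        position,
        f"go movetime {movetime_ms}",
    ]


for command in build_uci_commands():
    print(command)
```

In a real session the commands are written to the engine's standard input one at a time, waiting for `uciok` after `uci` and for `readyok` after `isready` before continuing.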

Position #1, White to move, draw.

We start with something simple for humans, but not at all obvious for chess engines. White has a material advantage in the form of an extra bishop. Despite this, the position is equal and should be judged as such by chess engines.

Sting found the correct move immediately and reached the correct evaluation of the position after 3 seconds of thinking.
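Whether an engine "judges the position as equal" can be read from the `score` field of its UCI `info` output, which reports either centipawns (`cp`) or a forced mate (`mate`). The following is a minimal sketch of such a check; the 20-centipawn threshold and the helper names are assumptions made for illustration, and the sample line is hand-written in UCI format, not real Sting output.

```python
import re
from typing import Optional, Tuple

# Matches the "score cp <n>" or "score mate <n>" part of a UCI info line.
SCORE_RE = re.compile(r"\bscore (cp|mate) (-?\d+)")


def parse_score(info_line: str) -> Optional[Tuple[str, int]]:
    """Return ("cp", value) or ("mate", value), or None if no score is present."""
    match = SCORE_RE.search(info_line)
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def looks_drawn(info_line: str, threshold_cp: int = 20) -> bool:
    """True if the line reports a centipawn score close to zero.

    threshold_cp is an arbitrary illustrative cutoff, not an engine setting.
    """
    score = parse_score(info_line)
    return score is not None and score[0] == "cp" and abs(score[1]) <= threshold_cp


# Hand-written example line in UCI format (not actual engine output).
sample = "info depth 30 score cp 0 nodes 123456 pv e2e4"
print(parse_score(sample), looks_drawn(sample))
```

An engine that misjudges a position like this one would typically keep reporting a score of several hundred centipawns for White, reflecting only the extra material.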

Position #2, White to move, draw.

Here we already see a much more difficult setup on the chessboard. White can achieve a draw even though Black will get a queen. Sting "sees" the correct continuation 2 seconds after the start of the analysis.

Position #3, White to move, White wins.

And here is a position that has remained unsolved by chess engines for many years. The level of difficulty is extremely high. The solution is "unintuitive" and requires a lot of maneuvering before the pawn is promoted to a queen. Most modern engines analyzing this position either cannot find the correct solution or take hours to reach it.

Sting takes three minutes to find the right first move and four minutes to pinpoint the continuation leading to White's victory.

Position #4, White to move, draw.

This is probably the kind of position that is most difficult for chess engines. White is down material, with a weaker piece (a bishop instead of a rook), and cannot avoid further material losses. A draw is possible by setting up a so-called fortress. White can set up the fortress in several ways, and the result will be the same: a draw, as Black will not be able to break the fortress despite a large material advantage.

As of the date of this post, only the Sting SF 28 AI chess engine was able to show that White can achieve a draw in this position.

The fortress that White sets up looks like this:

Sting can... sting by pointing out solutions improbable to humans and even more improbable to some chess engines.

I am sure that Sting can be a great addition to any chess toolbox as an engine for special tasks.

I would like to invite you to download and test Sting SF 28 AI in the most difficult and interesting chess positions.

Android – Compiled by Archimedes

Linux ARM & Intel – Compiled by Darius

Mac Apple Silicon & Intel – Compiled by Darius

Windows – Compiled by Marek Kwiatkowski

Great!

An ancestor of Crystal, in short